Introduction

Over the past two decades, more and more cameras have found their way into automobiles, and today, driver assistance systems have become indispensable in modern cars. When contemplating self-driving vehicles, many people envision a vehicle where one gets in and is brought to the destination while using the drive time for other things, such as working, watching television, or simply sleeping. To make this vision a reality, the sensor systems in the vehicles must function reliably regardless of the time of day, weather conditions, lighting, and road conditions.

This post illustrates how the requirements for self-driving vehicles have proven to be a great challenge for the system manufacturers while also looking at the attempts being made to master these challenges.

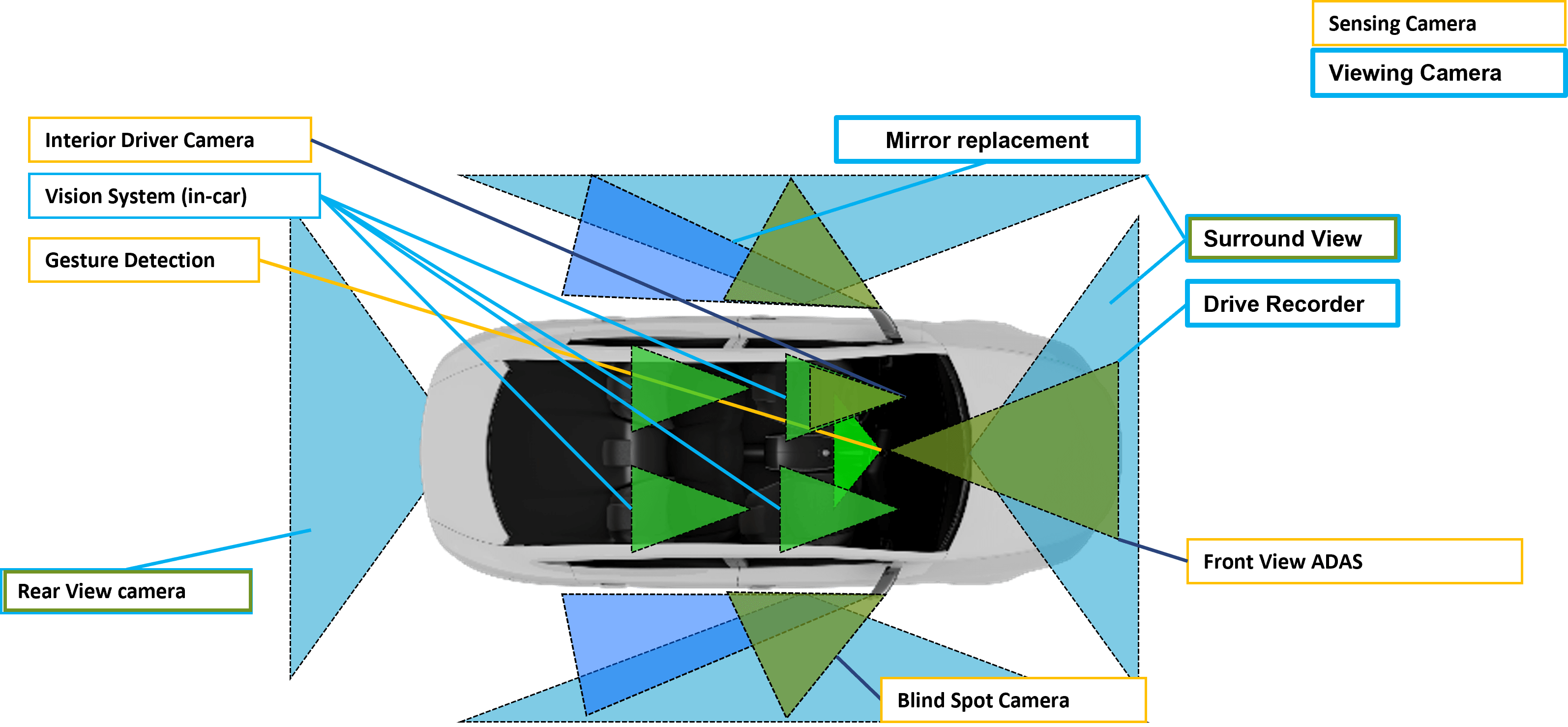

Cameras in automobiles

How are cameras utilized in automobiles? Currently, it is not uncommon to find more than 30 cameras serving different purposes in luxury class vehicles. These include:

- Rearview cameras

- Cameras for all-around vision

- Cameras that replace the rearview mirror

- Driver analysis (for vehicle control and attention monitoring)

- Lane detection

- Traffic sign recognition

- Distance warning system

- Adaptation of the light system (e.g., high beam)

- Night vision

Challenges for automobile camera systems

Depending on the application, the cameras face a variety of challenges. The main challenges include:

- Poor lighting conditions (night, dusk, tunnels, parking garages)

- Extreme contrasts (direct sunlight, brightly lit areas at night)

- Poor weather conditions (rain, fog, snow)

- Contamination of the cameras

- Complexity of scenes

Cameras for the light system need a high dynamic range and must be able to recognize colors.

Cameras for lane detection need a high dynamic range, high sensitivity, and must be able to recognize colors.

Cameras for traffic sign recognition require high sensitivity, short exposure times, high resolution, and must recognize colors.

Cameras for object recognition must have high sensitivity and high resolution, and they must see in three dimensions (stereo).

Examples of challenges

Contamination

Contamination of cameras is a constant challenge and one that manufacturers attempt to solve with, for example, appropriate covers that only open when necessary or by positioning the cameras behind windshield wipers.

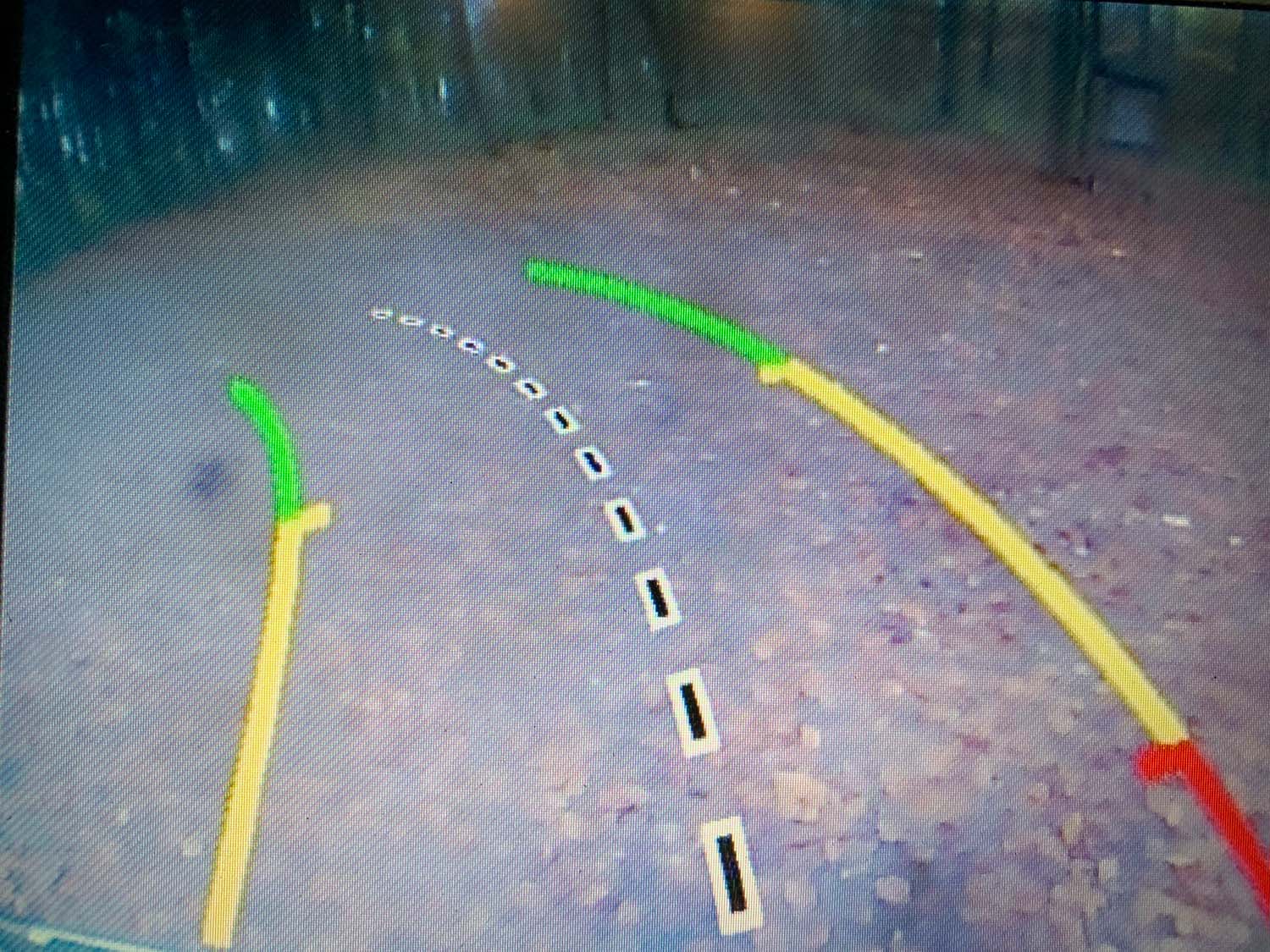

Lane detection

Many structures around a vehicle look like lane markings. Recognizing the correct lane markings is one of the biggest challenges for autonomous vehicles. In addition, regional differences, different colors, and weather conditions make recognition difficult.

Light assistance

Light assistance systems face the difficult challenge of distinguishing oncoming and preceding vehicles from other objects in the environment. Moving objects must be distinguished from static objects (street lighting, reflectors, etc.). Cars with two parallel lights are easy to recognize. But they must also be detected if one light is defective or if the lights are pulsed. Motorcycles must also be seen.

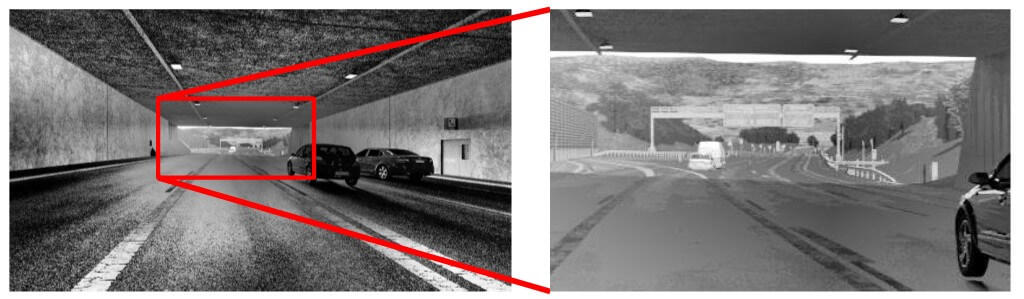

High dynamic range

When the sun is low and we are driving directly towards it, we fold down the sun visors, but even then, conditions arise in which we cannot see objects with our eyes. That's why such situations lead to accidents time and again. Cameras face the same challenge, which also arises at the end of tunnels and in numerous other everyday situations.

Flicker

Vehicles encounter many artificial light sources in road traffic, including street lighting, traffic signs, and traffic lights. Almost all modern light sources are LED-based and pulsed, so they flicker at different and unknown frequencies. This is a challenge for vehicle cameras, which continuously produce images for analysis (essentially video). In one frame, a traffic sign lights up; in the next, it has disappeared.
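The effect can be illustrated with a minimal sketch that computes how much of a camera's exposure window overlaps the on-phase of a pulsed LED. The function name and all numbers (LED frequency, duty cycle, exposure time) are illustrative assumptions, not measured values for any real sign or camera.

```python
def led_visible_fraction(exposure_s, led_freq_hz, duty_cycle, start_s):
    """Fraction of the exposure window during which a pulsed LED is on.

    Assumes an ideal rectangular pulse that switches on at t = 0, period,
    2 * period, ... and stays on for duty_cycle * period each time.
    """
    period = 1.0 / led_freq_hz
    on_time = duty_cycle * period
    end_s = start_s + exposure_s
    lit = 0.0
    # Walk over every LED period that overlaps the exposure window.
    k = int(start_s // period)
    while k * period < end_s:
        on_start = k * period
        on_end = on_start + on_time
        lit += max(0.0, min(end_s, on_end) - max(start_s, on_start))
        k += 1
    return lit / exposure_s


if __name__ == "__main__":
    # Hypothetical setup: 30 frames per second, 1 ms exposure, 100 Hz LED, 20 % duty cycle.
    for frame in range(6):
        fraction = led_visible_fraction(0.001, 100.0, 0.2, frame / 30.0)
        print(f"frame {frame}: LED lit during {fraction:.0%} of the exposure")
```

With a short exposure, the result depends entirely on where the exposure falls within the LED period, which is why a sign can be visible in one frame and dark in the next. A longer exposure averages over the pulses, at the cost of motion blur and a higher risk of saturation.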

Resolution

In moving vehicles, images are taken continuously and in rapid succession to capture changes in the environment. The resolution is therefore limited by the amount of data the system can handle. If the cameras are also dirty, out of focus, or their focus has shifted due to temperature, problems quickly arise, for example in the recognition of traffic signs.

Stray light

Not only can high or low contrasts cause issues, but specific characteristics of a camera system can also lead to problems. High-contrast scenes in particular suffer from stray light, i.e., unwanted reflections and scattering of light in the lens or camera housing. Additional problems can arise in front of the camera due to fog, haze, or smog.

Weather and lighting conditions

Cameras must operate day and night, at all temperatures (-40°C to 100°C), and in all weather conditions. These conditions naturally pose significant challenges. We have all experienced image noise in low-light conditions with our cell phones. This image noise doubles on average with every 7°C increase in temperature, which can quickly cause issues even in good lighting conditions.
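To get a feeling for what doubling every 7°C means over the automotive temperature range, the rule of thumb can be written as a one-line formula. This is only a sketch of the rule stated above; the 25°C reference temperature and the function name are assumptions chosen for illustration.

```python
def noise_factor(temp_c, ref_temp_c=25.0, doubling_step_c=7.0):
    """Noise relative to the reference temperature, assuming it doubles every doubling_step_c."""
    return 2.0 ** ((temp_c - ref_temp_c) / doubling_step_c)


if __name__ == "__main__":
    # Hypothetical temperatures between a cold start and a hot windshield mount.
    for temp_c in (-40, 0, 25, 60, 81, 100):
        print(f"{temp_c:>4} °C: noise x {noise_factor(temp_c):.3g}")
```

Under this rule, a camera at 81°C is eight doubling steps above the assumed 25°C reference, so its noise is 256 times higher than at room temperature.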

Regional differences

For many objects, it's also necessary to identify and distinguish regional differences. For example, highway signs are blue in France and Germany, and country roads are signed in green in France. In Switzerland, it's the other way around: highways are green, and country roads are blue. Road markings in the USA are white or yellow depending on the type. In Germany, the usual road markings are white, and construction site markings are yellow. In Austria, however, construction site markings are orange.

An infinite number of objects

No matter how hard you try to classify and assign objects, the attempt will always fail due to the sheer number of different things in infinite shapes and sizes. What's more, the objects will also change from their viewing perspective.

The limits of cameras

Cameras reach their limits in three specific areas that cannot be exceeded with the current state of technology.

- Distance measurements of objects at long distances (> 20 m)

- Seeing in fog and rain

- Seeing in low-light conditions
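For the first point, the standard stereo triangulation relation shows why long distances are hard: depth is inversely proportional to disparity, so a fixed disparity error causes a depth error that grows with the square of the distance. The sketch below assumes a rectified stereo pair; the focal length, baseline, and quarter-pixel matching error are hypothetical values, not data from a specific system.

```python
def stereo_depth_m(disparity_px, focal_px, baseline_m):
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m, focal_px, baseline_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    focal_px, baseline_m = 1200.0, 0.2  # assumed camera parameters
    for depth in (5.0, 20.0, 80.0):
        error = depth_error_m(depth, focal_px, baseline_m)
        print(f"object at {depth:5.1f} m: depth uncertainty about {error:.2f} m")
```

Quadrupling the distance increases the uncertainty sixteenfold, which is one reason additional ranging sensors are attractive.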

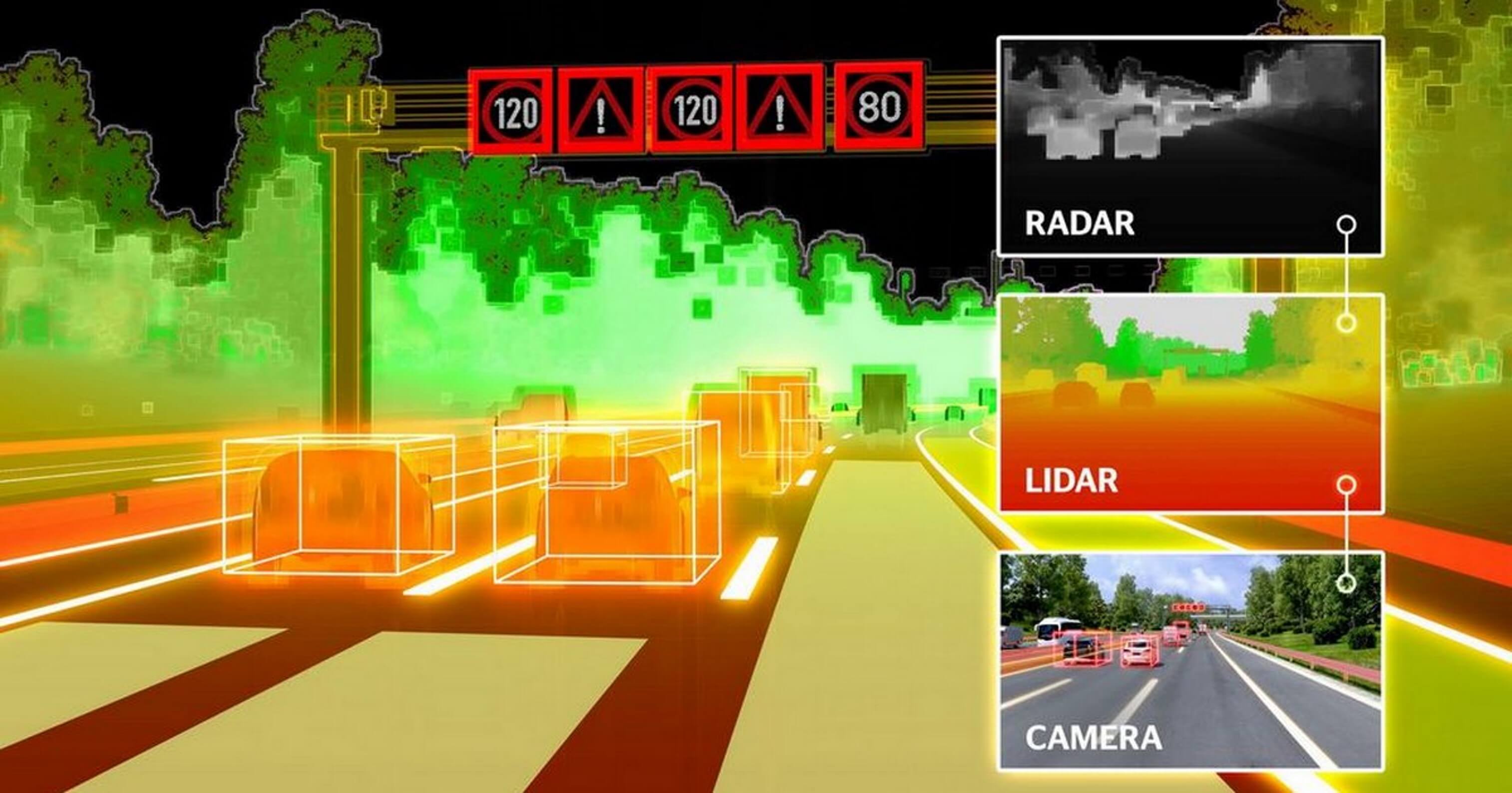

Additional sensors are installed in vehicles with high automation to combat these limitations.

- LIDAR (distance measurements)

- RADAR (distance measurements and unaffected by rain and fog)

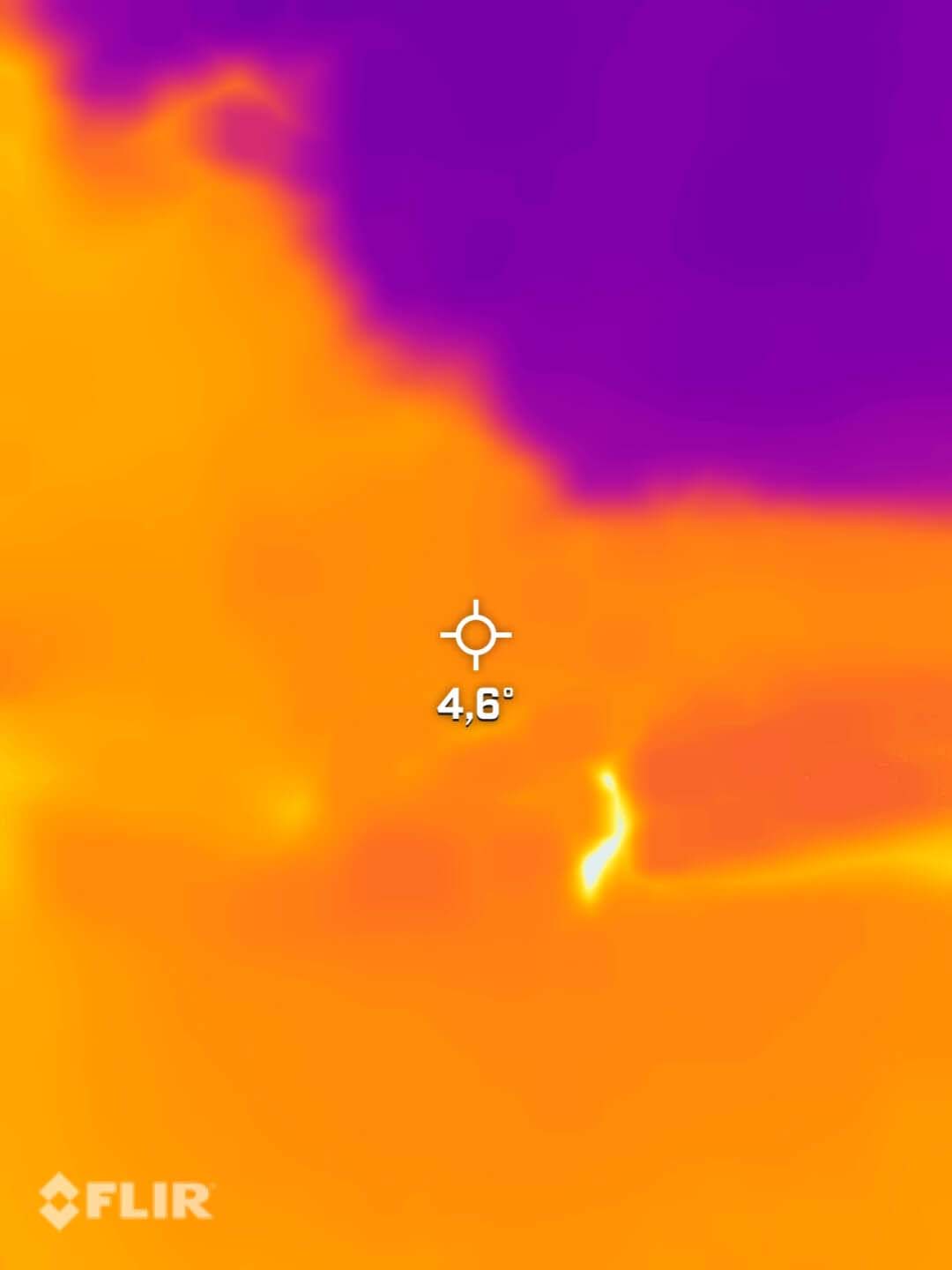

- Thermal imaging cameras (detection of living and heated objects)

The radar sensors, in particular, work well in rain and fog.

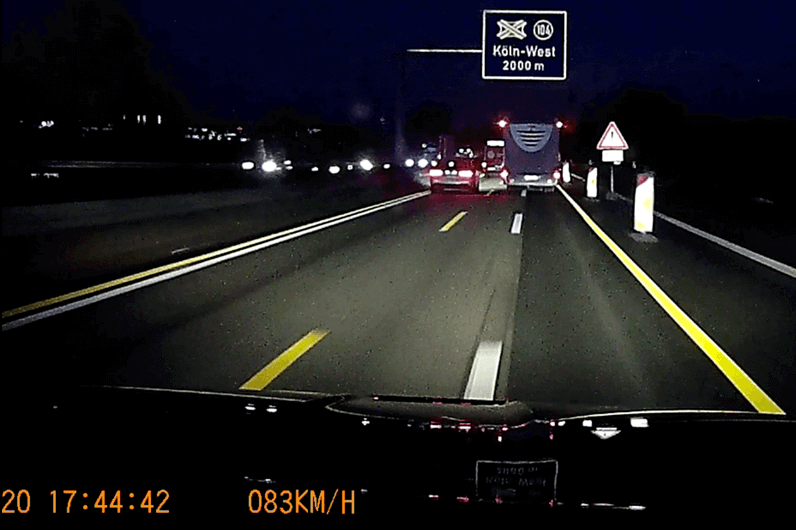

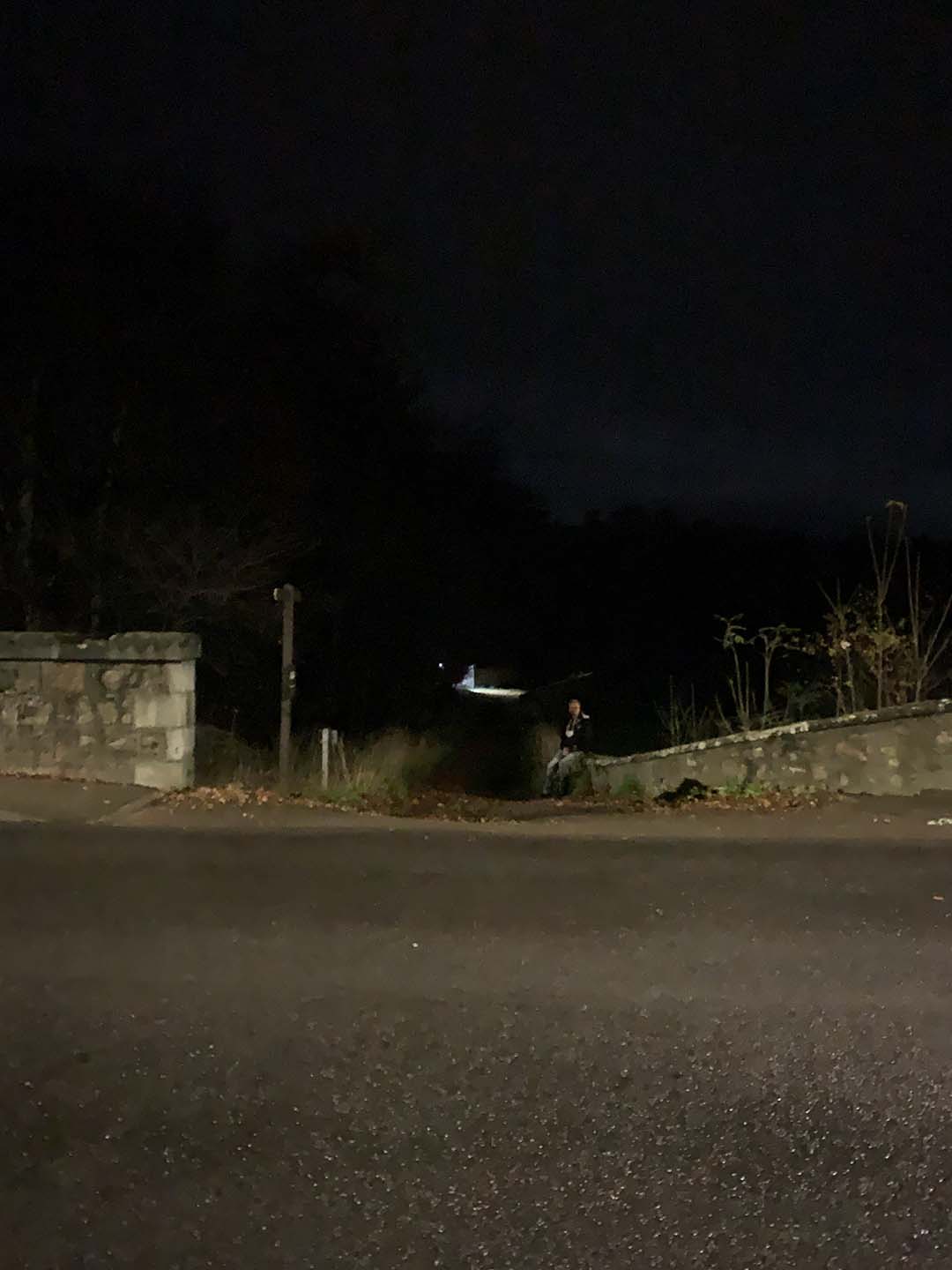

One example of the limitations of camera systems is night vision. As shown in the image below, cameras have difficulty detecting objects in dark areas at night when using the standard exposure times.

If a thermal imaging camera is added to the system, these objects become much more clearly visible.

Solutions to the challenges

The solution to these challenges is a three-tiered system.

- Camera systems have improved significantly in recent years, and development continues so that all vital information is captured in the images. The system cannot detect anything that is not in the images.

- Once all systems are installed, they must be coordinated and calibrated to function as one unit; the synergies then become apparent in the evaluation.

- It is crucial to continue the development of intelligent evaluation algorithms, including artificial intelligence and neural networks, to improve the recognition of objects and functionality in complex situations.

Optimization of camera systems

HDR

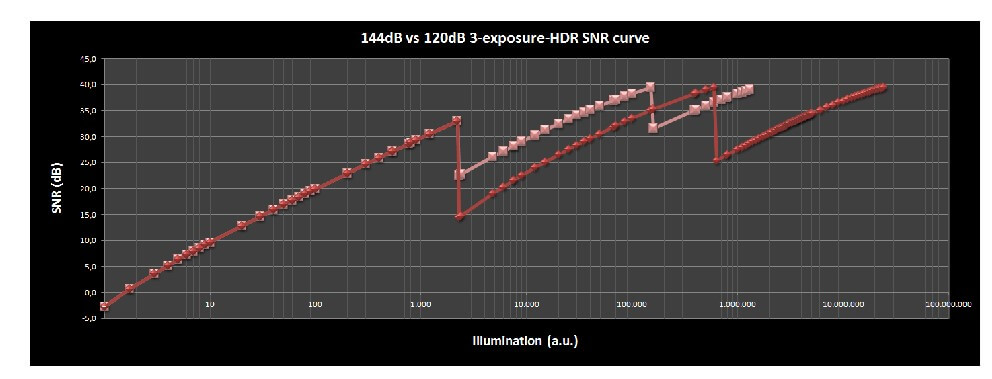

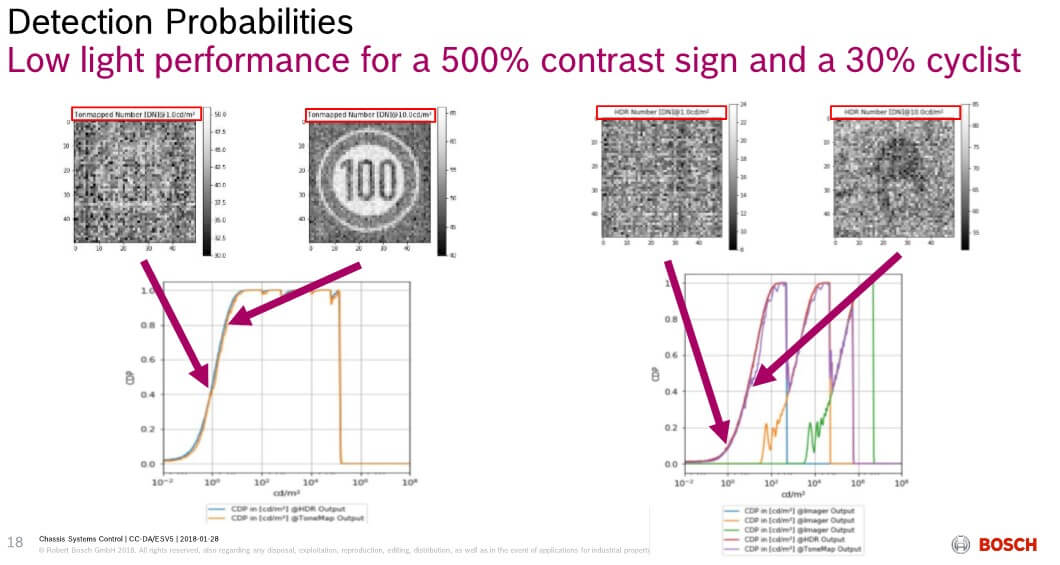

Camera systems in today's vehicles generally have HDR (high dynamic range) capabilities that are technologically implemented in different ways. One example is using pixels on the sensor with different sensitivity or gain. In this case, the various pixels that lie next to each other are combined into one pixel. As a result, two exposures are created simultaneously and combined into one image, and thus, a high contrast range can be recorded.
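A minimal sketch of this idea, assuming two neighboring pixels that see the same scene point with gains differing by a known ratio: the high-gain reading is used as long as it is not close to saturation, otherwise the low-gain reading is scaled up. The function name, the 12-bit full scale, and the 90% switch-over threshold are illustrative assumptions; real sensors typically blend the two readings more smoothly.

```python
def merge_dual_gain(high_gain_value, low_gain_value, gain_ratio,
                    full_scale=4095, knee=0.9):
    """Combine two simultaneous readings into one linear value.

    high_gain_value: reading of the sensitive pixel (clips in bright areas)
    low_gain_value:  reading of the less sensitive neighbor
    gain_ratio:      sensitivity of the high-gain pixel relative to the low-gain one
    """
    if high_gain_value < knee * full_scale:
        # Dark or mid-tone region: the sensitive pixel has the better signal-to-noise ratio.
        return float(high_gain_value)
    # Bright region: the sensitive pixel is (nearly) saturated, so rescale the other one.
    return float(low_gain_value) * gain_ratio


if __name__ == "__main__":
    print(merge_dual_gain(1000, 62, 16))    # shadow detail comes from the high-gain pixel
    print(merge_dual_gain(4095, 2000, 16))  # highlight detail comes from the low-gain pixel
```

With a gain ratio of 16, two 12-bit readings cover a linear range of roughly 16 bits.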

However, such an HDR recording also has a drawback: on the output side, the tonal values must be packed into a given color depth (number of bits). This is why the end of a tunnel is not always rendered brighter in the output image. Instead, the image is "modeled" into the white area, a process called "tone rendering" (also known as tone mapping).
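The packing step can be sketched with a simple logarithmic curve that compresses a wide linear range into 8 bits. The logarithmic curve, the input range, and the function name are assumptions for illustration; production cameras use their own, often locally adaptive, tone curves.

```python
import math


def tone_map(linear_value, max_linear=65520.0, out_bits=8):
    """Compress a linear HDR value into an integer code of out_bits using a log curve."""
    out_max = (1 << out_bits) - 1
    clipped = min(max(linear_value, 0.0), max_linear)
    return round(out_max * math.log1p(clipped) / math.log1p(max_linear))


if __name__ == "__main__":
    # Hypothetical linear values: tunnel wall, road surface, tunnel exit.
    for value in (100.0, 1000.0, 32000.0):
        print(f"linear {value:>8.0f} -> 8-bit code {tone_map(value)}")
```

Large differences in scene brightness end up as small differences in the output code, so a contrast that was clearly present in the linear data may become hard to detect after tone rendering.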

Because of these interactions, new, extended metrics had to be developed to better describe the properties of camera systems. For example, contrast transfer accuracy (CTA) [1] indicates whether a particular contrast between the object and background can be detected.

Flare

To reduce flare, manufacturers can use special, optimized anti-reflective coatings on the lenses and high-quality absorbing materials in the mounts. The lens design can also minimize the number of air-glass transitions and thus the areas where reflections can occur.

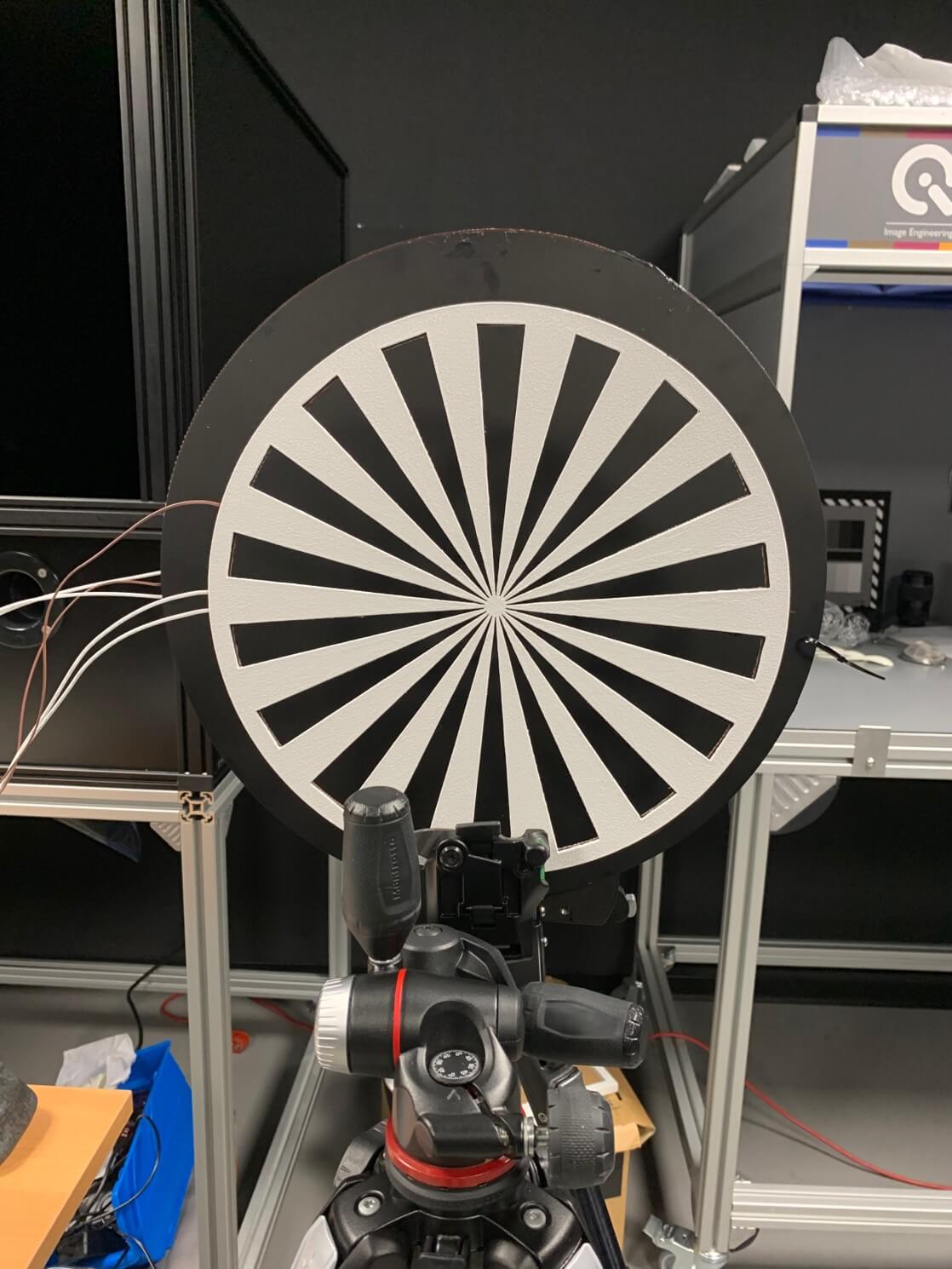

Distortion

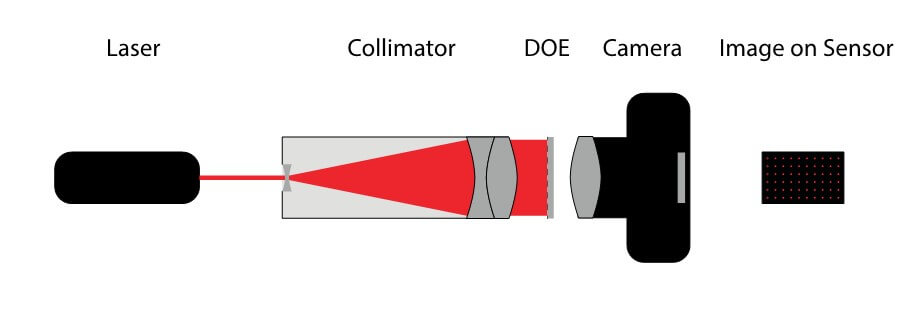

The images must be free of distortion for distance measurements and for the interaction of stereo cameras. For this purpose, the distortion can be characterized and then corrected computationally. A test method initially developed for space-based earth observation cameras has been modified to characterize cameras with a large angle of view at infinity.

This method uses a beam-expanded laser that illuminates a diffractive optical element (DOE). This setup generates a defined pattern of points, virtually from infinity, which is recorded by the camera. Analysis software then uses this image to determine the parameters needed to rectify the image.

This method can also be used to characterize the influence of a windshield. The algorithms for calculating the effect are still under development.
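Once the distortion has been characterized, removing it from image coordinates could look like the following sketch. It assumes a simple two-coefficient radial model in normalized image coordinates, which is a common textbook choice and not necessarily the model used by the method described above; the coefficients are hypothetical.

```python
def distort(x, y, k1, k2):
    """Apply radial distortion to an ideal point (normalized image coordinates)."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert the radial model by fixed-point iteration, starting at the distorted point."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    k1, k2 = -0.2, 0.05            # hypothetical coefficients from a characterization
    xd, yd = distort(0.3, -0.2, k1, k2)
    print(undistort(xd, yd, k1, k2))  # should be close to the ideal point (0.3, -0.2)
```

The fixed-point iteration converges quickly for moderate distortion; very wide-angle lenses may need a different model or a more robust solver.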

Climate

Another significant issue is optimizing image quality across temperatures. A camera mounted behind the windshield can easily reach 80°C or more during the summer, and in winter it must still function at temperatures as low as -40°C. This is achieved by carefully selecting the camera materials. To verify the results, the camera is tested at extreme temperatures in a climate chamber: it is placed inside the chamber and, once the target temperature is reached, captures test images through a portal window.

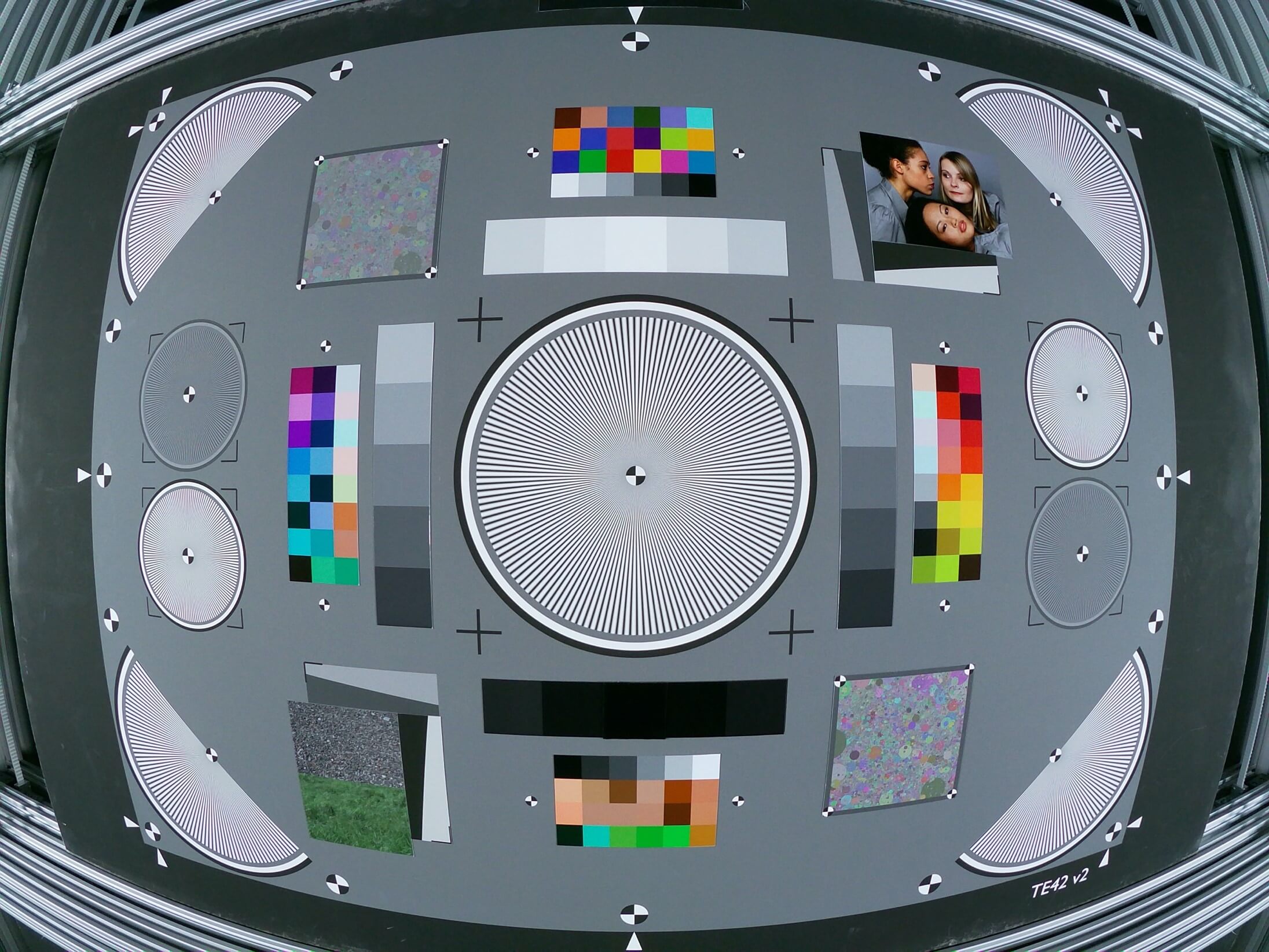

Image quality in general

The image quality aspects described above have been selected to illustrate the efforts required to ensure that all driving assistance and autonomous systems are safe.

In addition to the above examples, several other parameters need to be optimized and further developed to ensure that cameras deliver the high quality necessary before installation in vehicles. These include:

- Measurement of OECF (Opto Electronic Conversion Function)

- Noise

- Resolution, including spherical aberrations

- White balance

- Edge darkening in intensity and color

- Chromatic aberration

- Stray light

- Color reproduction

- Defective pixels and inclusions on the sensor

- Flicker

Calibration

Calibration is vital for camera systems; even the best cameras will not deliver usable results without it. During calibration, defective pixels are identified and corrected, the light fall-off toward the image edges is characterized and compensated, and the distortion is measured and corrected. Furthermore, the exposure must be set correctly, the white balance and color reproduction must be adjusted, and finally, the camera must be mounted and properly aligned.
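Two of these steps, defective pixel correction and compensation of the light fall-off, can be sketched in a few lines. The approach shown here (median of the neighbors, division by a flat-field image) is a common generic technique and an assumption on our part, not a description of a specific calibration pipeline; images are plain lists of rows to keep the example self-contained.

```python
from statistics import median


def correct_defective_pixels(image, defects):
    """Replace each known defective pixel by the median of its non-defective neighbors."""
    height, width = len(image), len(image[0])
    defect_set = set(defects)
    result = [row[:] for row in image]
    for r, c in defects:
        neighbors = [
            image[rr][cc]
            for rr in range(max(0, r - 1), min(height, r + 2))
            for cc in range(max(0, c - 1), min(width, c + 2))
            if (rr, cc) not in defect_set
        ]
        if neighbors:
            result[r][c] = median(neighbors)
    return result


def correct_falloff(image, flat_field):
    """Compensate edge darkening using an image of a uniformly lit surface (flat field)."""
    peak = max(max(row) for row in flat_field)
    return [
        [pixel * peak / flat for pixel, flat in zip(image_row, flat_row)]
        for image_row, flat_row in zip(image, flat_field)
    ]


if __name__ == "__main__":
    raw = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
    print(correct_defective_pixels(raw, [(1, 1)]))   # the hot pixel is replaced
    print(correct_falloff([[25, 100, 25]], [[50, 100, 50]]))
```

The defect list and the flat-field image are exactly the kind of per-camera data that calibration has to determine and store.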

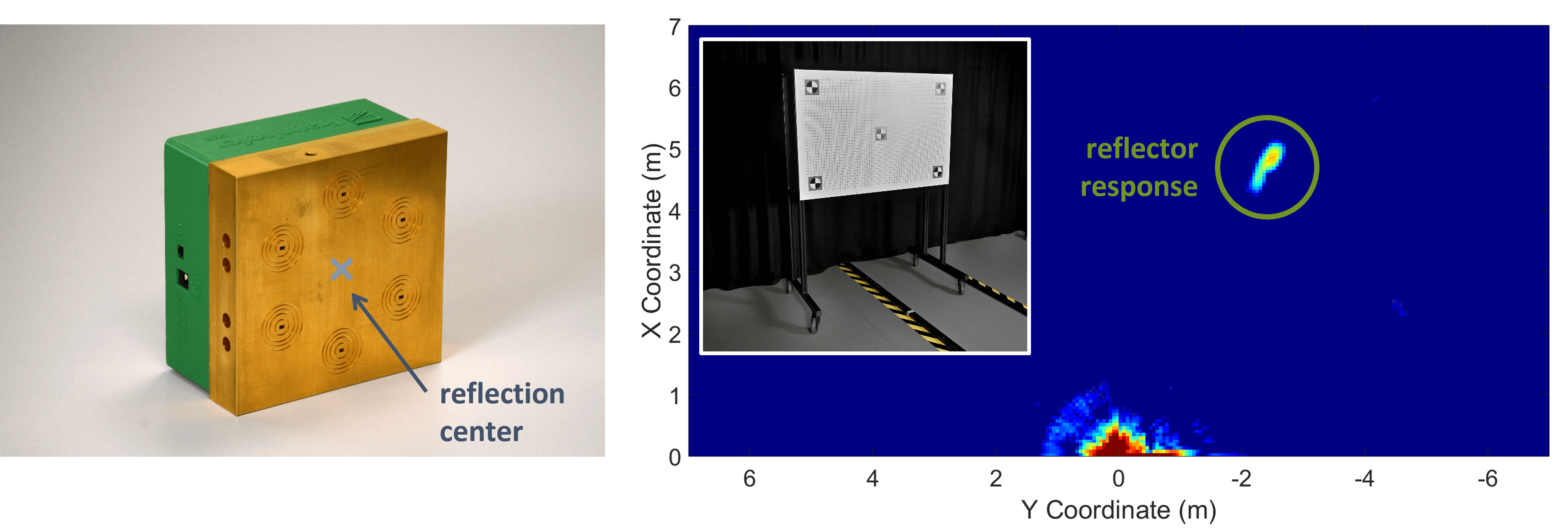

Alignment concerns the cameras relative to the vehicle and relative to each other, e.g., for the devices whose images are evaluated together for the "surround view." In addition, it is also essential that all the sensors are correctly aligned. For example, the radar system must be aligned with the camera so that both look in the same direction when detecting objects. The radar-reflecting objects can then be assigned to the visual objects.
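The following sketch illustrates why this alignment matters. It projects the azimuth angle of a radar detection into an image column with a simple pinhole model and looks up which camera bounding box it falls into. The focal length, image center, sign convention, and box coordinates are hypothetical, and a real system would use a full 3D extrinsic calibration rather than a single yaw angle.

```python
import math


def radar_to_image_column(azimuth_deg, focal_px, center_px, yaw_offset_deg=0.0):
    """Image column of a radar detection, given the radar-to-camera yaw offset."""
    angle = math.radians(azimuth_deg - yaw_offset_deg)
    return center_px + focal_px * math.tan(angle)


def match_radar_to_boxes(azimuth_deg, boxes, focal_px, center_px, yaw_offset_deg=0.0):
    """Return the label of the first box whose column range contains the detection."""
    column = radar_to_image_column(azimuth_deg, focal_px, center_px, yaw_offset_deg)
    for label, (left, right) in boxes.items():
        if left <= column <= right:
            return label
    return None


if __name__ == "__main__":
    boxes = {"car": (900, 1020), "pedestrian": (1100, 1160)}  # assumed detections
    print(match_radar_to_boxes(8.0, boxes, 1200.0, 960.0))       # sensors aligned
    print(match_radar_to_boxes(8.0, boxes, 1200.0, 960.0, 3.0))  # 3 degree yaw error
```

In this example, a yaw error of only three degrees moves the projected radar detection by more than 60 pixels, so it no longer falls inside the box of the object that produced it.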

Object recognition

In the past few years, new algorithms have been developed to improve the recognition of objects. Combining these algorithms with artificial intelligence and the training of neural networks has led to better and more reliable results.

Here are a few keywords for the solutions that have been developed: Object properties such as edges, corners, lines, circles, etc., are used for detection. Pedestrian detection uses histograms of oriented gradients (HOG). Hough transformations are utilized to detect geometric objects such as lane boundaries and traffic signs. Light assistance systems use segmentation and color analysis. Furthermore, object tracking, classification, environmental models, and 3D analyses are also utilized.
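As an illustration of one of these keywords, here is a minimal Hough transformation for straight lines. Each edge point votes for all lines (rho, theta) that pass through it, and the line with the most votes wins. This is a textbook sketch in plain Python with assumed bin sizes; the edge points are made up, and production systems use optimized implementations on real edge images.

```python
import math


def hough_strongest_line(points, theta_steps=180, rho_step=1.0):
    """Return (rho, theta_deg, votes) of the line supported by the most points.

    Lines are parameterized as x * cos(theta) + y * sin(theta) = rho.
    """
    votes = {}
    for x, y in points:
        for i in range(theta_steps):
            theta = math.pi * i / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            key = (round(rho / rho_step), i)
            votes[key] = votes.get(key, 0) + 1
    (rho_bin, i), count = max(votes.items(), key=lambda item: item[1])
    return rho_bin * rho_step, 180.0 * i / theta_steps, count


if __name__ == "__main__":
    # Hypothetical edge points: a vertical lane marking at x = 5 plus one outlier.
    edge_points = [(5, y) for y in range(0, 100, 5)] + [(50, 50)]
    print(hough_strongest_line(edge_points))
```

Because every point votes independently, the method tolerates gaps and outliers, which suits dashed or partly worn lane markings.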

Conclusion

Driver assistance camera systems are already leading to a significant reduction in fatal accidents. In Europe, the number fell by 25% on average between 2010 and 2017 (Statista). This trend will continue as more and more cars are equipped with such systems and as these systems continue to develop and improve.

Under reasonable weather conditions, driverless vehicles drive quite reliably through busy cities such as San Francisco. However, this reliability must also stay true under all weather conditions and without the cars going to the calibration garage every day. With that in mind, we are still around ten years away from the real-world, widespread use of self-driving vehicles. Nonetheless, it will almost certainly become a reality in the future.

Acknowledgments

We want to thank our partners at Continental AG and Bosch GmbH for providing graphics and images.